Risk-Sensitive Reinforcement Learning for Designing Robust Low-Thrust Interplanetary Trajectories

Abstract

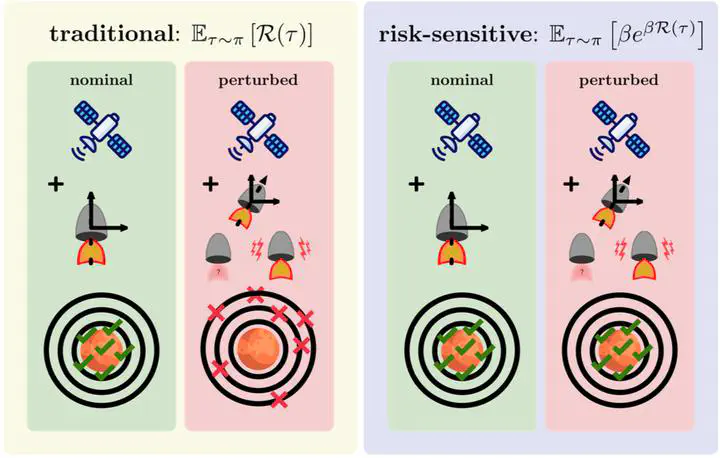

In recent years, small spacecraft have been increasingly proposed for interplanetary missions due to their cost-effectiveness, rapid development cycles, and ability to perform tasks as complex as those of larger spacecraft. However, these benefits come with trade-offs: limited budgets often necessitate components with low technology readiness, which increases the risk of control execution errors arising from misaligned thrusters, actuator noise, and missed-thrust events. Recently, reinforcement learning (RL) has emerged as a promising approach for designing trajectories that are robust to these errors. However, existing methods depend on prior knowledge of the errors to construct training simulations, which restricts their ability to generalize to unforeseen errors. To overcome this limitation, we propose using Risk-Sensitive Reinforcement Learning (RSRL) to train policies that remain robust to control execution errors without requiring prior knowledge of their exact nature, making the approach more practical for real-world missions. Specifically, we build our RSRL algorithm on top of the Proximal Policy Optimization (PPO) algorithm by replacing its risk-neutral objective with the risk-sensitive exponential criterion. We evaluate the resulting algorithm, RS-PPO, by comparing its performance against PPO on an Earth-to-Mars interplanetary transfer, where both are trained in an error-free environment and tested under various control execution errors.
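The risk-sensitive exponential criterion is commonly written as J_beta = (1/beta) * log E[exp(beta * G)], where G is the episode return. As beta approaches 0, it reduces to the risk-neutral expected return E[G]. For small beta it behaves like E[G] + (beta/2) * Var[G], so beta < 0 penalizes variability in returns. The sketch below estimates this quantity from sampled returns. The function name `exponential_criterion` and the convention that beta < 0 means risk-averse are illustrative assumptions. The abstract does not state which sign convention RS-PPO uses or how the criterion is folded into PPO's surrogate objective.

```python
import math


def exponential_criterion(returns, beta):
    """Estimate (1/beta) * log E[exp(beta * G)] from sampled returns G.

    Hypothetical helper for illustration. Assumed convention: beta < 0 is
    risk-averse, beta > 0 is risk-seeking, and beta == 0 falls back to the
    risk-neutral sample mean.
    """
    if not returns:
        raise ValueError("need at least one return sample")
    n = len(returns)
    if beta == 0.0:
        # Limit beta -> 0: the criterion reduces to the expected return.
        return sum(returns) / n
    # Stable log-mean-exp of x_i = beta * G_i (subtract the max before exp).
    scaled = [beta * g for g in returns]
    m = max(scaled)
    log_mean_exp = m + math.log(sum(math.exp(x - m) for x in scaled)) - math.log(n)
    return log_mean_exp / beta


# Two hypothetical sets of episode returns with the same mean (10.0).
consistent = [10.0, 10.0, 10.0, 10.0]
volatile = [0.0, 20.0, 0.0, 20.0]

print(exponential_criterion(consistent, -0.5))  # about 10.0
print(exponential_criterion(volatile, -0.5))    # much lower: spread is penalized
print(exponential_criterion(volatile, 0.0))     # 10.0: risk-neutral mean
```

Under a risk-neutral objective the two return sets are indistinguishable. Under a risk-averse beta, the volatile set scores well below the consistent one. This preference for low-variance outcomes is the intuition for why optimizing such a criterion can favor policies that degrade less when execution deviates from the nominal plan.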