MW-NeRF: Multi-Wavelength NeRF Models for Spacecraft Modeling in Shadowed Environments

Abstract

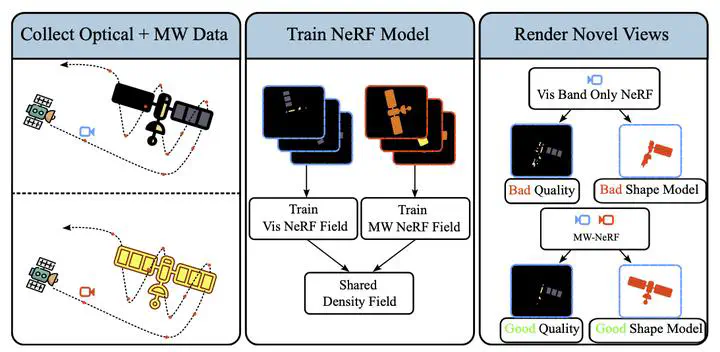

The future of Neural Radiance Fields (NeRFs) in aerospace applications depends heavily on their ability to cope with the hostile observing conditions of space-based operations. Existing NeRF models can reconstruct many real-world scenes in extremely fine detail, but they still lack robustness to the extreme lighting conditions found outside Earth’s atmosphere. Because optical imagery may not contain all the information required to faithfully reconstruct a scene, we propose a new NeRF architecture that can process images taken at different wavelengths, where vital shape information may be readily available. This work presents the proposed model, multi-wavelength NeRF (MW-NeRF), along with a large, realistic dataset suite covering simple to complex satellite geometries under multiple lighting conditions and at several target ranges. Early analysis shows that dark regions in these datasets are difficult to overcome with optical training alone, and that better underlying shape models can be learned when multi-wavelength data are provided.